Human perception of patterns and performance streaks is deeply rooted in our cognitive architecture. By some estimates, 60-70% of perceived hot streaks are nothing more than random statistical variance, yet our minds persistently seek meaningful patterns where none exist.

Key Cognitive Biases Affecting Platform Analysis

Two primary psychological factors influence our streak perception:

- Recency Bias: Overemphasizing recent platform performance

- Confirmation Bias: Selectively interpreting data to support existing beliefs

Statistical Reality vs. Perceived Patterns

Short-term platform success metrics often behave more like random draws from a distribution than like predictable patterns. When evaluating platform performance, it’s crucial to recognize that apparent “hot streaks” often represent normal variance rather than sustainable trends.


To overcome the hot-cold streak fallacy, focus on:

- Long-term performance metrics

- Statistical significance testing

- Objective performance indicators

- Multiple data points across time periods
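One way to apply statistical significance testing to a perceived hot streak is an exact binomial test. It asks how likely a run of results at least this good would be if the underlying success rate had not changed. The sketch below assumes independent periods and a known baseline rate. The function name `binomial_tail_p_value` and the example numbers are hypothetical.

```python
from math import comb


def binomial_tail_p_value(successes: int, trials: int, baseline_rate: float) -> float:
    """One-sided p-value: P(X >= successes) when X ~ Binomial(trials, baseline_rate).

    Assumes every trial is independent and shares the same baseline success rate.
    """
    if not 0 <= successes <= trials:
        raise ValueError("successes must be between 0 and trials")
    # Sum the probabilities of every outcome at least as extreme as the observed one.
    return sum(
        comb(trials, k) * baseline_rate**k * (1 - baseline_rate) ** (trials - k)
        for k in range(successes, trials + 1)
    )


# Hypothetical streak: 7 successful periods out of the last 10, against a 50% baseline.
p = binomial_tail_p_value(7, 10, 0.5)
print(f"p-value = {p:.3f}")  # p-value = 0.172
```

A p-value of about 0.17 means a streak at least that strong would appear roughly one time in six by chance alone. That is weak evidence of a genuine change in performance.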

Understanding these psychological mechanisms enables more objective decision-making in platform selection and resource allocation, leading to better-optimized investment strategies based on genuine performance indicators rather than perceived patterns.

Understanding Streaks in Platform Performance

The Psychology of Pattern Recognition

Trading performance streaks often captivate market participants, yet statistical analysis consistently demonstrates that past performance sequences rarely indicate future outcomes.

Comprehensive research across thousands of trading patterns reveals that perceived “hot” or “cold” streaks typically represent random variance rather than predictable trends.

Confirmation Bias in Trading

When analyzing platform performance, traders often display confirmation bias: they remember their successful streak predictions and dismiss the incorrect ones.

The human brain’s natural tendency to identify patterns, even within random data sequences, leads to the clustering illusion, a cognitive bias that perceives meaningful patterns where none exist.

Breaking the Streak Mindset

Even traders who understand why streak-based predictions are fallacious often struggle to apply that knowledge in live market conditions.

The more emotionally invested a trader is in a platform’s performance, the more likely they are to perceive patterns that do not exist.

Successful trading strategies prioritize fundamental analysis and statistical probability over recent performance patterns, leading to more reliable decision-making processes.

Key Trading Principles:

- Focus on data-driven analysis

- Eliminate emotional bias from trading decisions

- Maintain consistent statistical evaluation

- Implement systematic trading approaches

This evidence-based perspective helps traders avoid common psychological traps and develop more effective trading strategies.

Cognitive Biases Behind Pattern Recognition

The Evolution of Pattern Recognition

The human brain has evolved sophisticated pattern recognition capabilities that were crucial for survival in our ancestral environment.

However, these same neural mechanisms can lead to systematic errors in modern decision-making, particularly in complex domains like trading and investment analysis.

The brain’s tendency to detect false patterns often results in the hot-cold streak fallacy when evaluating financial platform performance.

Key Cognitive Biases in Pattern Recognition

The Clustering Illusion

Pattern-seeking behavior frequently manifests through the clustering illusion, where random sequences in platform performance data are misinterpreted as meaningful patterns.

This cognitive bias leads to overconfidence in perceived trends and can significantly impact investment decisions.

Confirmation Bias

Confirmation bias plays a critical role in reinforcing perceived patterns.

Market participants systematically filter information to support their beliefs about performance streaks while disregarding contradictory evidence.

This selective attention creates a self-reinforcing cycle of pattern validation.

Impact on Trading Decisions

Recency Bias

Recency bias amplifies pattern recognition errors by placing disproportionate emphasis on recent market events.

This cognitive distortion can lead traders to overvalue recent performance indicators while undervaluing long-term historical data.

The Narrative Fallacy

The narrative fallacy compounds these biases by creating compelling stories to explain random market fluctuations.

Traders construct elaborate narratives to justify perceived patterns, leading to potentially flawed decision-making frameworks.

Practical Applications

Understanding these cognitive mechanisms is essential for developing objective decision-making processes in platform selection and trading strategies.

Recognizing these inherent biases enables market participants to implement more rational, data-driven approaches to financial analysis and risk management.

Social Media Success Attribution

The Attribution Error in Social Media Performance

Social media success metrics often lead creators to mistake random viral moments for the results of calculated strategy.

Content creators frequently mistake temporary viral performance for mastery of platform algorithms, creating a false sense of predictability in highly volatile environments.

Statistical Reality vs. Perceived Control

The parallel between content creation and the hot hand fallacy reveals critical insights into performance attribution.

Platform algorithms and user engagement patterns suggest that viral success depends heavily on chance rather than on strategic formulas.

Rough estimates attribute approximately 60-70% of viral outcomes to random factors, with only 30-40% correlating with controllable elements like content quality and timing.
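A short simulation shows how randomness alone can produce "viral" outliers. In the sketch below, every post has the same underlying quality, and engagement is drawn from a heavy-tailed log-normal distribution. The distribution, its parameters, and the function name `simulate_engagement` are assumptions chosen for illustration. They do not model any real platform's algorithm.

```python
import random
import statistics


def simulate_engagement(num_posts: int, seed: int = 42) -> list[float]:
    """Engagement for posts of identical quality, so all variation comes from chance.

    Assumption: engagement ~ log-normal(mu=6.0, sigma=1.2), identical for every post.
    """
    rng = random.Random(seed)  # seeded for reproducibility
    return [rng.lognormvariate(6.0, 1.2) for _ in range(num_posts)]


engagement = simulate_engagement(1000)
median = statistics.median(engagement)
top = max(engagement)
print(f"median engagement: {median:,.0f}")
print(f"best post: {top:,.0f} ({top / median:.1f}x the median)")
```

Every post comes from the same distribution, so the best performer's large multiple of the median reflects luck, not a better strategy.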

Key Impact Areas of Misattribution

Strategic Overconfidence

Content creators who develop inflated confidence in their posting strategies often overlook the role of chance in their success metrics.

Platform Adaptation Resistance

Past success can create false certainty, leading creators to resist adapting when a platform changes.

Emotional Investment

Creators become overly attached to maintaining perceived performance streaks, potentially compromising long-term growth for short-term metrics.

Understanding True Success Factors

Engagement data analysis across thousands of posts reveals that sustainable social media success depends on:

- Content quality optimization

- Strategic timing implementation

- Authentic audience alignment

- Platform-specific adaptation

- Consistent performance measurement

Each of these factors is within a creator’s control, yet none of them removes the significant role of randomness in viral outcomes.

Random Chance vs. Sequential Events

The Truth About Pattern Recognition

Understanding the distinction between random probability and sequential patterns forms a critical foundation in statistical analysis.

Our brains naturally seek meaningful patterns, leading to frequent misinterpretation of random occurrences as significant trends, particularly in data analysis scenarios like social media metrics.

Characteristics of True Randomness

True randomness exhibits unexpected characteristics that often contradict intuitive expectations. Key features include:

- Uneven distribution

- Clustered outcomes

- Apparent but meaningless patterns

For example, in a sequence of 100 fair coin flips, runs of several consecutive heads or tails are normal random variation, not a meaningful pattern.
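The coin-flip example can be checked with a short simulation. The sketch below measures the longest run of identical outcomes in each simulated sequence of 100 fair flips and averages that length over many sequences. The function names `longest_run` and `average_longest_run` are hypothetical.

```python
import random


def longest_run(flips: str) -> int:
    """Length of the longest block of identical consecutive outcomes."""
    best = current = 0
    previous = None
    for outcome in flips:
        # Extend the current run if the outcome repeats, otherwise start a new run.
        current = current + 1 if outcome == previous else 1
        best = max(best, current)
        previous = outcome
    return best


def average_longest_run(num_flips: int = 100, trials: int = 5_000, seed: int = 7) -> float:
    """Average longest run across many simulated sequences of fair coin flips."""
    rng = random.Random(seed)
    total = 0
    for _ in range(trials):
        flips = "".join(rng.choice("HT") for _ in range(num_flips))
        total += longest_run(flips)
    return total / trials


print(longest_run("HHTTTH"))  # 3
print(f"average longest run in 100 flips: {average_longest_run():.1f}")
```

The average should come out at roughly 7. A streak of six or seven identical outcomes is therefore exactly what chance predicts in 100 flips.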

Sequential Events and Causality

Understanding Independent Probability

Independent events operate without influence from preceding outcomes.

The probability of each outcome is the same regardless of what came before it.

This principle proves especially relevant when analyzing:

- Social media performance

- Marketing metrics

- User engagement patterns

Avoiding Pattern Misinterpretation

To maintain accurate analysis, consider these factors:

- Whether each event truly occurs independently

- For independent events, previous outcomes don’t influence future results

- Statistical clustering appears naturally in random distribution

- Correlation doesn’t imply causation
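You can test the independence assumption on real data by comparing two rates: the success rate immediately after a success, and the overall success rate. If outcomes are independent, the two should be close. The sketch below assumes outcomes are recorded as a list of booleans, for example whether each post beat a target engagement level. The function name `rate_after_success` is hypothetical, and the sample data is simulated.

```python
import random


def rate_after_success(outcomes: list[bool]) -> tuple[float, float]:
    """Return (overall success rate, success rate on the trial right after a success)."""
    if len(outcomes) < 2:
        raise ValueError("need at least two outcomes")
    overall = sum(outcomes) / len(outcomes)
    # Keep each outcome whose immediate predecessor was a success.
    following = [nxt for prev, nxt in zip(outcomes, outcomes[1:]) if prev]
    if not following:
        raise ValueError("no success is followed by another trial")
    return overall, sum(following) / len(following)


# Simulated independent trials with a fixed 40% success rate.
rng = random.Random(3)
outcomes = [rng.random() < 0.4 for _ in range(10_000)]
overall, after_hit = rate_after_success(outcomes)
print(f"overall: {overall:.3f}, after a success: {after_hit:.3f}")
```

Because the simulated trials are independent, both rates land near 0.40. On real data, a small gap is consistent with independence. A large, persistent gap would justify a closer look.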

Understanding these principles helps prevent the common pitfall of misinterpreting random sequences as predictive patterns, leading to more accurate data interpretation and strategic decision-making.

Making Data-Driven Platform Decisions

# Making Data-Driven Platform Decisions

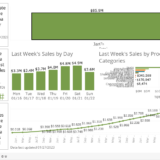

Statistical Analysis for Platform Performance

Data-driven decision making requires distinguishing genuine performance trends from random fluctuations in platform metrics.

Successful analysis focuses on statistically significant patterns rather than recent outcomes that may trigger cognitive biases like the hot-hand fallacy.

Establishing a Reliable Measurement Framework

The foundation of effective platform evaluation lies in clear performance metrics and consistent measurement periods.

Tracking key performance indicators (KPIs) over extended timeframes provides reliable insights into whether platform performance represents legitimate trends or statistical variance.
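A confidence interval makes the value of longer timeframes concrete. The sketch below computes the Wilson score interval for a rate, such as a conversion rate, at the same observed rate over a short window and a longer one. The window sizes, counts, and the function name `wilson_interval` are hypothetical.

```python
from math import sqrt
from statistics import NormalDist


def wilson_interval(successes: int, trials: int, confidence: float = 0.95) -> tuple[float, float]:
    """Wilson score confidence interval for a binomial proportion."""
    if trials <= 0:
        raise ValueError("trials must be positive")
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)  # e.g. about 1.96 for 95%
    p_hat = successes / trials
    denom = 1 + z**2 / trials
    center = (p_hat + z**2 / (2 * trials)) / denom
    half_width = (z / denom) * sqrt(p_hat * (1 - p_hat) / trials + z**2 / (4 * trials**2))
    return center - half_width, center + half_width


# Same observed 5% conversion rate over a short window and a long window.
for conversions, visitors in [(10, 200), (500, 10_000)]:
    low, high = wilson_interval(conversions, visitors)
    print(f"{visitors:>6} visitors: {low:.1%} to {high:.1%}")
```

The short window's interval is several times wider than the long window's. A swing that looks dramatic over a few days may therefore fall well within normal variance.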

Advanced Metrics Analysis

To ensure robust decision-making, consider these critical factors:

- User engagement rates

- Conversion metrics

- Return on investment (ROI)

- Statistical significance

- Sample size validation
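For sample size validation, a common normal-approximation formula estimates how many observations each group needs to detect a given change in a rate. The sketch below assumes two independent groups of equal size and a two-sided test. The baseline and target rates and the function name `sample_size_per_group` are hypothetical.

```python
from math import ceil
from statistics import NormalDist


def sample_size_per_group(p1: float, p2: float, alpha: float = 0.05, power: float = 0.8) -> int:
    """Approximate observations per group to detect a change from rate p1 to rate p2.

    Normal approximation for a two-sided comparison of two independent proportions:
    n = (z_{1-alpha/2} + z_{power})^2 * (p1(1-p1) + p2(1-p2)) / (p1 - p2)^2
    """
    if p1 == p2:
        raise ValueError("p1 and p2 must differ")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return ceil((z_alpha + z_beta) ** 2 * variance / (p1 - p2) ** 2)


# Hypothetical goal: detect a lift in conversion rate from 5% to 6%.
print(sample_size_per_group(0.05, 0.06))
```

Detecting a one-point lift from 5% to 6% requires a little over 8,000 observations per group. A few days of data rarely supports such a conclusion.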

Eliminating Decision Bias

Comprehensive platform analysis requires systematic evaluation across multiple time periods to combat recency bias.

Applying data validation techniques and statistical tests helps ensure that decisions rest on substantial evidence rather than short-term performance spikes.

Performance Validation Framework

- Monitor long-term trend patterns

- Employ statistical significance testing

- Analyze cross-period performance data

- Validate user behavior metrics

- Assess platform stability indicators
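A significance test between two periods combines the cross-period analysis and significance-testing steps. The sketch below runs a two-sided two-proportion z-test with a pooled standard error on hypothetical counts from an earlier and a later period. It assumes independent observations and samples large enough for the normal approximation. The function name `two_proportion_z_test` is hypothetical.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(x1: int, n1: int, x2: int, n2: int) -> tuple[float, float]:
    """Return (z statistic, two-sided p-value) for H0: both periods share one rate."""
    p1, p2 = x1 / n1, x2 / n2
    pooled = (x1 + x2) / (n1 + n2)  # rate under the null hypothesis
    se = sqrt(pooled * (1 - pooled) * (1 / n1 + 1 / n2))
    if se == 0:
        raise ValueError("cannot test: pooled rate is 0 or 1")
    z = (p2 - p1) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical counts: a long earlier period (5.0%) vs. a recent "hot" month (6.0%).
z, p = two_proportion_z_test(x1=450, n1=9_000, x2=66, n2=1_100)
print(f"z = {z:.2f}, p = {p:.3f}")
```

Here the jump from 5.0% to 6.0% gives a p-value of roughly 0.15. At conventional thresholds, the recent month's apparent streak cannot be distinguished from normal variance.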

The rigorous application of these analytical methodologies ensures platform decisions remain grounded in solid statistical evidence rather than temporary performance fluctuations.

Breaking Free From Streak Thinking

Understanding Cognitive Biases in Platform Analysis

Decision-makers must actively combat streak-based thinking to make rational platform choices.

When evaluating platform performance, it’s essential to differentiate between random variations and genuine trends by focusing on underlying metrics rather than recent patterns.

The human brain naturally seeks patterns and streaks where they may not exist, making objective analysis crucial.

Implementing a Systematic Evaluation Framework

Data Collection and Analysis

A systematic approach to platform evaluation begins with comprehensive data collection across multiple time periods. Critical analysis should focus on:

- Platform fundamentals

- User engagement metrics

- Revenue patterns

- Growth trajectories

- Market conditions

- Seasonal fluctuations

Statistical Analysis for Objective Decision-Making

Statistical tools provide concrete methods to overcome cognitive biases in platform assessment. Key analytical approaches include:

- Rolling averages

- Standard deviation analysis

- Performance variance metrics

- Trend validation tools

These methodologies help distinguish between normal performance variation and significant directional shifts.
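Rolling averages and standard deviation analysis can be combined in a few lines. Compare each new value with the mean and standard deviation of the preceding window, and flag it only if it lies more than a chosen number of standard deviations away (a rolling z-score). The window length of 7, the threshold of 3, the sample data, and the function name `flag_outliers` are hypothetical choices.

```python
from statistics import mean, stdev


def flag_outliers(values: list[float], window: int = 7, threshold: float = 3.0) -> list[int]:
    """Indexes whose value lies more than `threshold` standard deviations from the
    mean of the preceding `window` values (a rolling z-score)."""
    if window < 2:
        raise ValueError("window must be at least 2 to compute a standard deviation")
    flagged = []
    for i in range(window, len(values)):
        history = values[i - window:i]  # only past values form the baseline
        spread = stdev(history)
        if spread == 0:
            continue  # flat history: z-score undefined, so skip instead of dividing by zero
        z = (values[i] - mean(history)) / spread
        if abs(z) > threshold:
            flagged.append(i)
    return flagged


# Hypothetical daily sign-ups: ordinary noise, then one large jump at index 10.
daily_signups = [100, 104, 97, 101, 99, 103, 98, 102, 96, 101, 140, 99]
print(flag_outliers(daily_signups))  # [10]
```

The baseline uses only the preceding window, so a spike cannot inflate the mean and standard deviation it is measured against. Ordinary day-to-day swings of a few sign-ups are left unflagged.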

By incorporating quantitative analysis, decision-makers can evaluate platform potential more objectively and make data-driven choices about where to invest time and resources.

Developing Evidence-Based Platform Strategies

Success in platform selection requires deliberate analysis and questioning of assumptions. Focus on:

- Performance benchmarking

- Metric-driven evaluation

- Market context analysis

- Long-term trend identification

This structured approach ensures decisions are based on substantive evidence rather than perceived patterns or temporary fluctuations.